Tag: software-engineering

All the articles with the tag "software-engineering".

-

AI Encourages Mainstream Adoption of Formal Verification via LLM Assistance

• 1 min read

LLMs make formal verification easier, positioning it for widespread use in ensuring software correctness.

Read more -

Gemini 2.5 Pro Empowers SMEs by Translating Tacit Knowledge into BPMN 2.0 Diagrams

• 1 min read

Gemini 2.5 Pro LLM captures SMEs' tacit knowledge and converts it into BPMN 2.0 process diagrams for better business workflows.

Read more -

Open-Weight Mistral Large 3 Model Advances Multimodal and Efficient LLM Inference

• 1 min read

Mistral Large 3 is a powerful open-weight LLM with 41B active params, supporting text, images, and low-precision inference.

Read more -

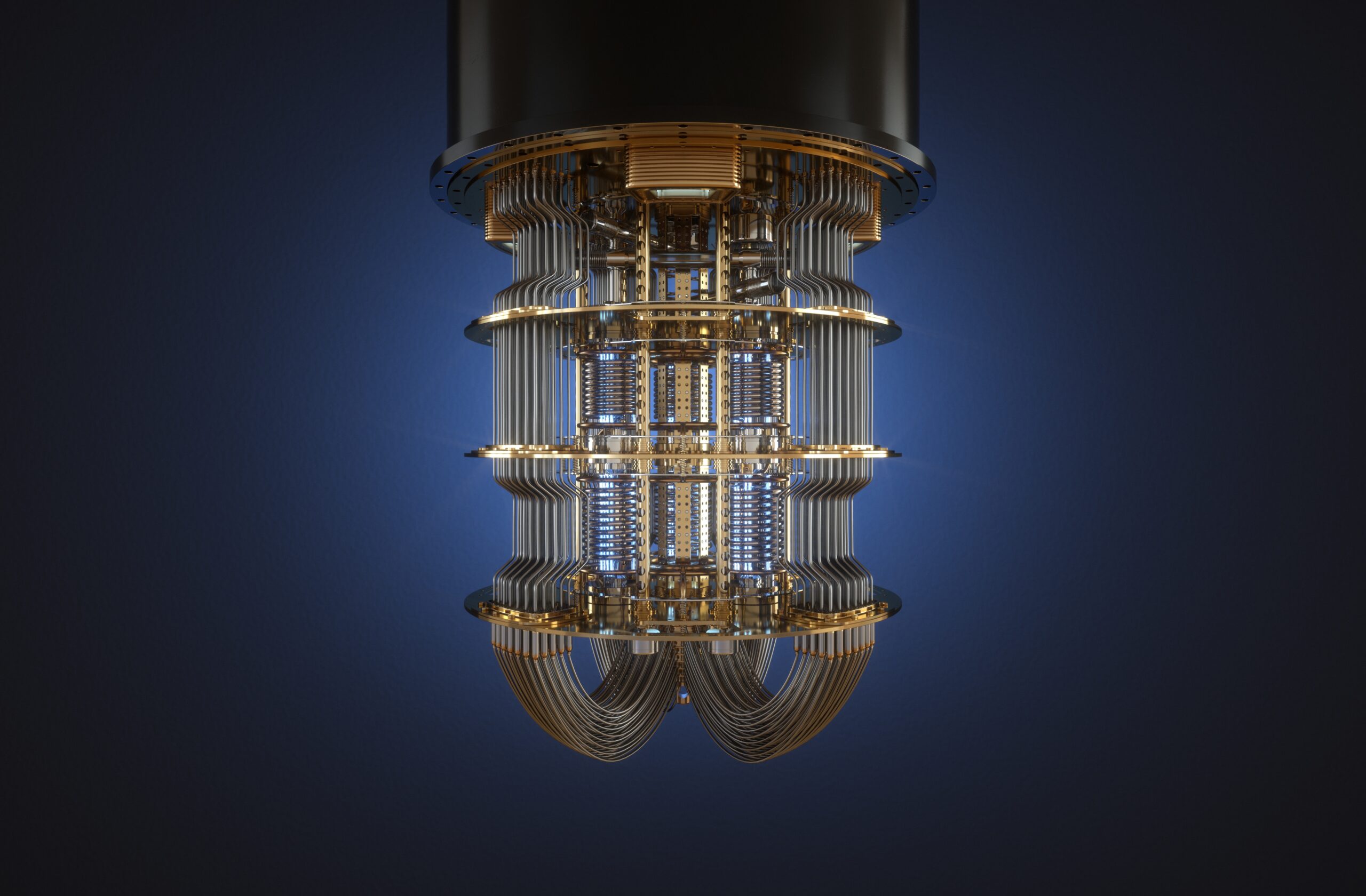

AI Techniques to Address Hardware-Software Challenges in Quantum Computing

• 1 min read

Researchers apply AI methods to tackle hardware-software integration issues in practical quantum computing development.

Read more -

Emergence of Post-LLM Architectures for Next-Gen AI

• 1 min read

Post-LLM architectures combine LLMs with new techniques to surpass current limitations and enable versatile AI.

Read more -

JFrog Launches Shadow AI Detection to Secure Enterprise AI Usage

• 1 min read

JFrog's Shadow AI Detection offers enterprises control over unmanaged AI models and API calls in dev pipelines.

Read more -

DeepSeek-V3.2 integrates reasoning into tool use

• 1 min read

DeepSeek-V3.2, released December 1, 2025, reasons while using tools, enabling real-time error recovery in coding workflows.

Read more -

OpenAI's 'Garlic' emerges as GPT-5.2/5.5 contender

• 1 min read

OpenAI’s internal model Garlic, built on improved pretraining and bug fixes, may launch as GPT-5.2 or GPT-5.5 early next year.

Read more -

OpenAI's 'Garlic' Model Challenges Gemini 3 and Opus 4.5

• 1 min read

OpenAI's new Garlic model outperforms Gemini 3 and Opus 4.5 in coding and reasoning, possibly launching as GPT-5.2 or GPT-5.5.

Read more -

DeepSeek V3.2-Exp Slashes Inference Costs

• 1 min read

DeepSeek’s new model cuts inference costs by 50% using sparse attention for long-context tasks.

Read more -

MIT’s Dynamic Computation Allocation for LLMs

• 1 min read

MIT researchers enable LLMs to dynamically adjust computation for harder problems, boosting efficiency.

Read more -

Study Compares Prompt Styles Across Leading LLMs

• 1 min read

New research benchmarks prompt engineering across major LLMs, revealing trade-offs in accuracy, speed, and cost.

Read more